Student uses Blade Runner to teach computer how to replicate movies


A computer’s artificial intelligence system has been taught to understand Blade Runner well enough for a Goldsmiths, University of London student to remake the entire film based on the AI system’s interpretation. Terence Broad has gone on to remake other films based on the AI’s understanding of Blade Runner.

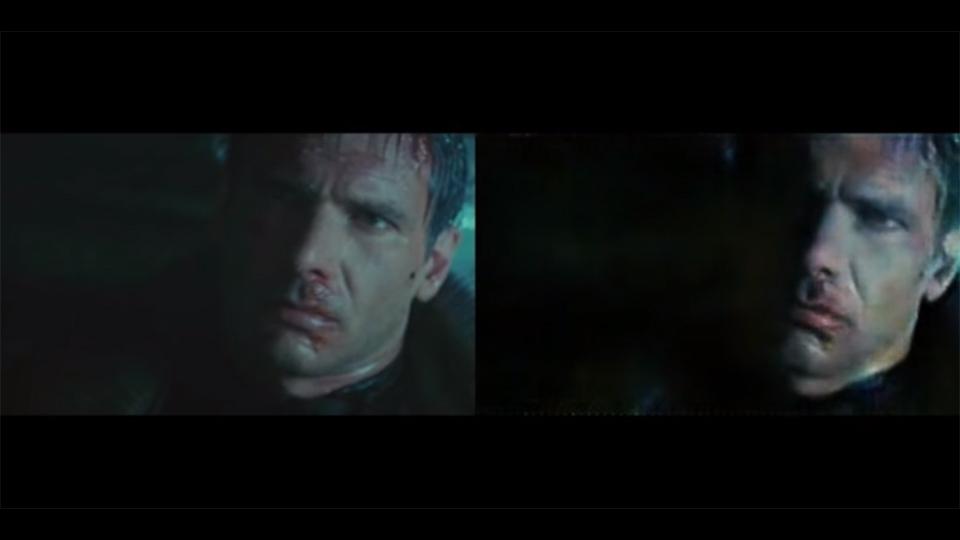

Blade Runner - Autoencoded: 'Tears in the Rain' scene - a still from the side by side comparison video

Over the last couple of years, machine-learning researchers have developed artificial neural networks (loosely inspired by animal brains) that learn from real images and then generate new ones.

The generated images have become so natural that neither computers nor the human eye can reliably tell real from artificial. But most models create images that are variations on a single subject, such as a face or a bedroom, taken from the same angle.

For his MSci Creative Computing project, Terence worked with the same technology but applied it to entire films for the first time, while also scaling up the size and changing the dimensions of the generated images.

He spent a year using an artificial neural network to reconstruct films: he trained the network to reconstruct individual frames and then re-sequenced the reconstructed frames into a film.
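The frame-by-frame approach can be illustrated with a toy in Python. This is a hedged sketch, not Terence's model: it assumes each frame has already been flattened into a short list of grayscale pixel values, and it swaps his deep network for a tiny linear autoencoder trained with plain stochastic gradient descent. The function names (`train_linear_autoencoder`, `encode`, `decode`, `reconstruct_film`) are hypothetical. Each frame is squeezed through a small latent code and decoded again; re-sequencing is simply emitting the reconstructed frames in their original order.

```python
import random

def encode(x, W):
    """Map a flattened frame x to a latent code z = W x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def decode(z, V):
    """Map a latent code z back to a frame y = V z."""
    return [sum(v * zi for v, zi in zip(row, z)) for row in V]

def train_linear_autoencoder(frames, latent_dim=1, epochs=2000, lr=0.05, seed=0):
    """Toy stand-in for a deep autoencoder: minimise 0.5 * ||V W x - x||^2 per frame with SGD."""
    rng = random.Random(seed)
    d = len(frames[0])
    W = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(latent_dim)]  # encoder, d -> k
    V = [[rng.uniform(-0.5, 0.5) for _ in range(latent_dim)] for _ in range(d)]  # decoder, k -> d
    for _ in range(epochs):
        for x in frames:
            z = encode(x, W)
            y = decode(z, V)
            err = [y[j] - x[j] for j in range(d)]  # gradient of the loss w.r.t. the output y
            # Gradient w.r.t. z, computed before the decoder weights change.
            gz = [sum(V[j][i] * err[j] for j in range(d)) for i in range(latent_dim)]
            for j in range(d):
                for i in range(latent_dim):
                    V[j][i] -= lr * err[j] * z[i]
            for i in range(latent_dim):
                for j in range(d):
                    W[i][j] -= lr * gz[i] * x[j]
    return W, V

def reconstruct_film(frames, W, V):
    """Reconstruct every frame and keep the original order (the re-sequencing step)."""
    return [decode(encode(f, W), V) for f in frames]
```

With a one-number latent code, this toy can only reproduce frames that vary along a single direction; real footage needs a far larger latent space and a much deeper network.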

Because the film's androids are engineered to be physically indistinguishable from human beings, Terence saw Blade Runner as the obvious choice for training the network.

Once the AI system had been trained on Blade Runner and had reconstructed it, Terence also asked it to produce versions of footage it had never seen, based on what it had learned from the 1982 Ridley Scott masterpiece.

The result is a series of bizarre, dream-like versions of original scenes which are reminiscent of expressionist paintings. Course leader and creative computing expert Dr Mick Grierson believes the technology could go on to become an interesting new effect for movie-makers in its own right.

The quality of the reconstructions differs depending on the style of film. Scenes which are static, high contrast and have little variation are reconstructed very well, for example, while the model has difficulty ‘remembering’ faces in animated films, such as A Scanner Darkly.

Terence adds: “In addition to reconstructing the film that the model was trained on, it is also possible to get the network to reconstruct any video that you want. I experimented with several different films, but the best results were definitely from reconstructing one of my favourite films - Koyaanisqatsi from 1982.

“The film consists almost entirely of slow motion and time-lapse footage, with a huge variety of different scenes, making it the ideal candidate for seeing how the Blade Runner model reconstructs different kinds of scenes.”
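Reconstructing a different film means pushing its frames through the model trained on Blade Runner, without retraining. A known property of linear autoencoders helps illustrate this: trained on squared error (without a bias term), their best solution spans the top principal components of the training frames. The hedged sketch below therefore replaces training with power iteration and keeps a single component. It is an illustration under those assumptions, not Terence's network, and the frame vectors and the function names `top_direction`, `reconstruct` and `mse` are hypothetical. Frames that resemble the training footage come back almost unchanged, while anything the model never learned is lost, echoing how reconstruction quality varies with the kind of scene.

```python
import math

def top_direction(frames, iters=200):
    """Power iteration for the leading eigenvector of X^T X (uncentred, no bias term)."""
    d = len(frames[0])
    v = [1.0] * d
    for _ in range(iters):
        proj = [sum(f[j] * v[j] for j in range(d)) for f in frames]      # X v
        w = [sum(p * f[j] for p, f in zip(proj, frames)) for j in range(d)]  # X^T (X v)
        norm = math.sqrt(sum(c * c for c in w))
        v = [c / norm for c in w]
    return v

def reconstruct(frame, u):
    """Encode a frame to one latent number, then decode it: projection onto u."""
    z = sum(a * b for a, b in zip(frame, u))
    return [z * c for c in u]

def mse(a, b):
    """Mean squared error between an original frame and its reconstruction."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
```

Here `u` plays the role of the trained model: frames from any other "film" are reconstructed only in terms of what `u` captured from the training frames.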

Terence intends to keep developing the model. In an echo of Blade Runner's advanced artificial intelligence, the films that computers ultimately reconstruct might end up indistinguishable from the originals.

Read more from Terence on Medium

Deep Dream for Video appears at the Goldsmiths Department of Computing Degree Show ‘Generation’ on Thursday 2 June: goldgeneration2016.org